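MNIST (Modified National Institute of Standards and Technology) is one of the most basic datasets in computer vision. Since its release in 1999, this dataset of handwritten images has served as a standard benchmark for classification algorithms.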

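TensorFlow 1.x was harder to program than PyTorch because of its relatively complex mechanisms (such as Session). In the fall of 2019 TensorFlow officially released version 2.0. The update is not backward compatible and feels more like a brand-new framework: in 2.0, Keras is integrated as TensorFlow's high-level API, and a simple neural network can be built with Keras in just a few dozen lines.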

This article uses the Keras API of TensorFlow 2 to discuss and explore handwritten digit recognition on MNIST.

Problem description

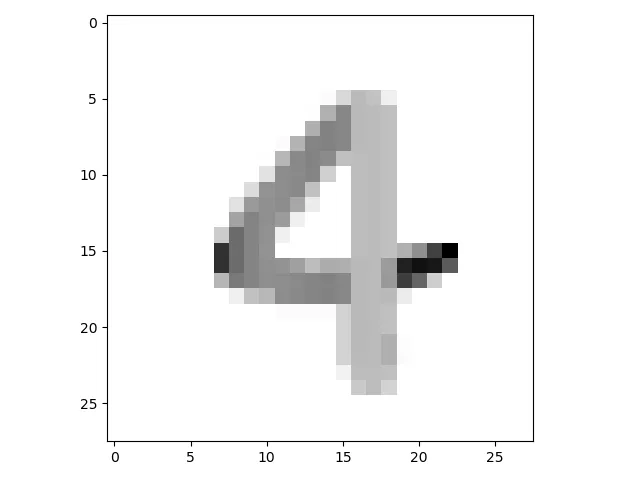

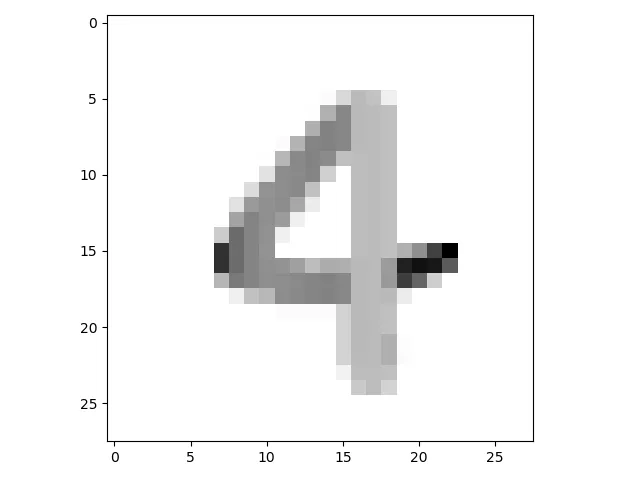

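MNIST provides grayscale images of the ten handwritten digits 0 through 9. Each digit has about 7,000 samples in different handwriting styles, so MNIST contains 70k samples in total. We use 60k for training and 10k for testing.

Each image is a $28\times 28$ matrix, and each entry takes an integer value in $[0,255]$ representing a gray level, as the figures above show.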
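As a small illustration of this representation, the sketch below builds a made-up $28\times 28$ image (the stroke pattern is purely hypothetical, not taken from MNIST), scales its gray levels to $[0,1]$, and flattens it into a vector of 784 values.

```python
# Hypothetical 28x28 image: a vertical stroke of bright pixels on a dark background.
HEIGHT, WIDTH = 28, 28
image = [[0] * WIDTH for _ in range(HEIGHT)]
for row in range(4, 24):
    image[row][14] = 255

# Scale gray levels from [0, 255] to [0.0, 1.0].
scaled = [[pixel / 255.0 for pixel in row] for row in image]

# Flatten the matrix row by row into one vector of 784 values.
flat = [pixel for row in scaled for pixel in row]

print(len(flat))             # 784
print(min(flat), max(flat))  # 0.0 1.0
```

Scaling to $[0,1]$ is a common preprocessing choice; it keeps the inputs in a small, uniform range.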

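The machine has one task: learn from tens of thousands of images to acquire the ability to recognize a new one.

So how do we train a deep learning model to solve this problem?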

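Basic principles of neural networks

Linear regression

Recognizing an image can be described simply: observe the image and output what it essentially is.

This is like associating one entity with another, that is, establishing a mapping between two entities. The simplest mapping is a linear one: with a parameter $w$ and an offset $b$ we can write the following linear mapping:

$$
Y=wX+b
$$

Here $X$ can be understood as the image (input data) and $Y$ as the recognition result (ground truth).

For an exact linear equation in two unknowns, two given points $(x,y)$ with distinct $x$ are enough to solve for $w$ and $b$, and with those values we can compute the exact $y$ for any given $x$.

Real data are rarely that ideal, and image recognition cannot be a simple linear relation. We therefore add a noise term $\epsilon$ to the equation above and let it follow the standard normal distribution:

$$
Y=wX+b+\epsilon, \quad \epsilon \sim N(0,1)
$$

The resulting points $(x,y)$ no longer lie exactly on one line. Because of the added error, such data are usually closer to what is observed in practice.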
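The noisy model can be simulated in a few lines. This is only a sketch: the values `TRUE_W` and `TRUE_B`, the range of `xs`, and the random seed are assumptions chosen for illustration.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

TRUE_W, TRUE_B = 1.5, 0.5  # hypothetical "true" parameters

def sample_point(x):
    """Return y = w*x + b + eps with eps drawn from N(0, 1)."""
    eps = random.gauss(0.0, 1.0)
    return TRUE_W * x + TRUE_B + eps

xs = [i / 10 for i in range(100)]
ys = [sample_point(x) for x in xs]

# The residuals y - (w*x + b) are exactly the noise samples.
residuals = [y - (TRUE_W * x + TRUE_B) for x, y in zip(xs, ys)]
print(sum(residuals) / len(residuals))  # sample mean of the noise
```

The points scatter around the line rather than lying on it, which is the situation a fitting procedure has to handle.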

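How do we judge how good the parameters are?

During training $Y$ is known, so we define a loss function over the current estimates $w'$ and $b'$:

$$
loss=\sum_i{(w'x_i+b'-y_i)}^2
$$

The loss measures how close the predictions $(w'x_i+b')$ are to the true values $y_i$, which turns the problem into minimizing the loss function.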
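The loss can be evaluated directly from its definition. In the sketch below the data points are hypothetical and lie exactly on $y=2x+1$, so the loss is zero for the correct parameters and positive otherwise.

```python
def squared_error_loss(w, b, xs, ys):
    """Sum over i of (w*x_i + b - y_i)^2."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys))

# Hypothetical data lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

print(squared_error_loss(2.0, 1.0, xs, ys))  # 0.0, a perfect fit
print(squared_error_loss(1.0, 0.0, xs, ys))  # 30.0
```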

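Gradient descent

For a continuous function, we usually locate a minimum through its derivative.

Let $f(x)$ be a continuous, twice-differentiable function. A point $x$ is a local minimum of $f$ if it satisfies both of the following conditions:

$$
f'(x)=0
$$

$$
f''(x)>0
$$

In other words, in a neighborhood of such a local minimum, for a sufficiently small $\delta>0$:

$$
f'(x+\delta)>0
$$

To the right of the minimum (positive direction) the derivative is positive, so we should step in the negative direction.

$$
f'(x-\delta)<0
$$

To the left of the minimum (negative direction) the derivative is negative, so we should step in the positive direction.

In summary, the direction of descent is opposite to the sign of the derivative.

Solving for the $w$ and $b$ that minimize the loss function is called least-squares parameter estimation for the linear regression model.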

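Data, however, are generally discrete. We therefore introduce a learning rate $lr$, a small step size that stands in for the infinitesimal change of the variable, and differentiate $loss$ with respect to $w$ and $b$. The gradient descent update is:

$$
w'=w-lr\times \frac{\partial loss}{\partial w}
$$

$$
\frac{\partial loss}{\partial w}=2\left(w\sum_i x_i^2-\sum_i (y_i-b)x_i\right)
$$

The update for the other parameter $b'$ follows in the same way:

$$
b'=b-lr\times \frac{\partial loss}{\partial b}
$$

$$
\frac{\partial loss}{\partial b}=2\left(\sum_i b-\sum_i (y_i-wx_i)\right)
$$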

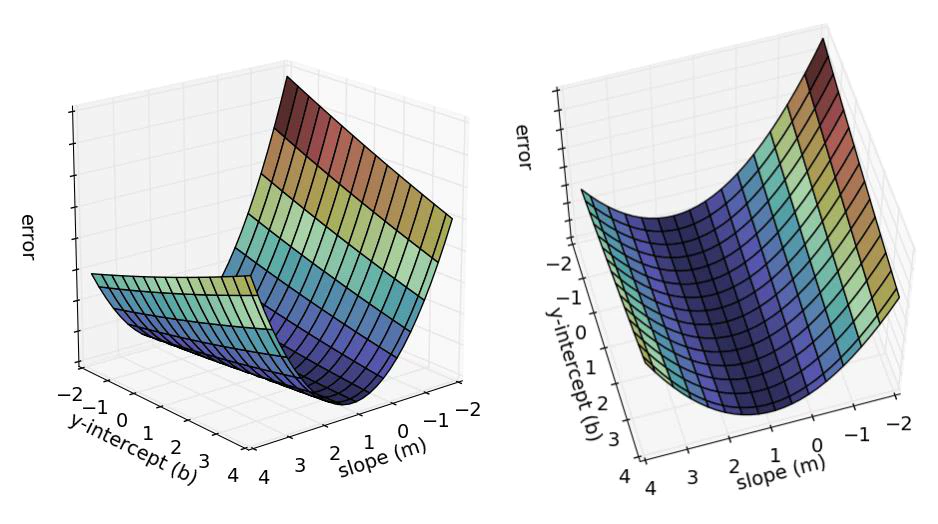

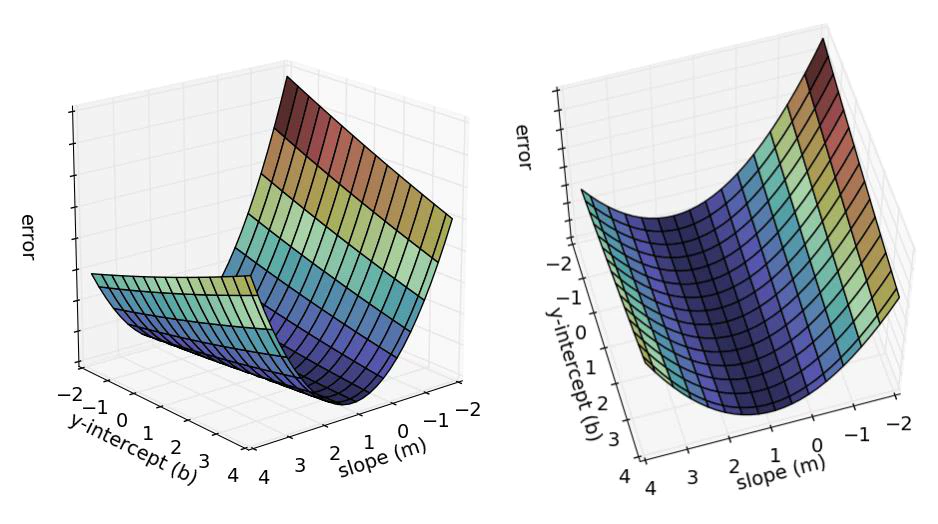

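After gradient descent over a large amount of data, $w$ and $b$ settle at stable values and the model is determined. As the figure shows, gradient descent usually finds a local minimum but not necessarily the global minimum. As long as that local minimum is acceptable for the problem at hand, we can accept it as the solution.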
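The update rules for $w$ and $b$ can be tried on a toy problem. The following is a minimal sketch, assuming hypothetical noise-free data on $y=2x+1$; the learning rate, step count, and starting point are arbitrary choices, not values from this article's experiments.

```python
def gradients(w, b, xs, ys):
    """Partial derivatives of sum_i (w*x_i + b - y_i)^2 with respect to w and b."""
    grad_w = 2 * sum((w * x + b - y) * x for x, y in zip(xs, ys))
    grad_b = 2 * sum(w * x + b - y for x, y in zip(xs, ys))
    return grad_w, grad_b

def fit(xs, ys, lr=0.01, steps=5000):
    w, b = 0.0, 0.0  # arbitrary starting point
    for _ in range(steps):
        grad_w, grad_b = gradients(w, b, xs, ys)
        # Step against the gradient, scaled by the learning rate.
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Hypothetical noise-free data on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

A learning rate that is too large makes the updates diverge on this loss, while one that is too small only slows convergence, which is why it is treated as a tunable hyperparameter.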

More generally, a parameter vector $\mathbf w^T=(w_1,w_2,w_3,\dots,w_d)$ is applied to an input vector $\mathbf x$, in the form:

$$
f(\mathbf x)=\mathbf w^T \mathbf x + b
$$

We try to learn

$$
f(\mathbf x_i)=\mathbf w^T \mathbf x_i + b
$$

such that

$$
f(\mathbf x_i)\approx y_i
$$

This is multivariate linear regression.
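For a single sample the vector form is just a dot product plus the offset. The weights, offset, and sample below are hypothetical values picked so the arithmetic is easy to follow.

```python
def predict(w, x, b):
    """f(x) = w^T x + b for one sample x with d features."""
    assert len(w) == len(x)
    return sum(w_j * x_j for w_j, x_j in zip(w, x)) + b

w = [0.5, -1.0, 2.0]   # hypothetical weights, d = 3
b = 0.25               # hypothetical offset
x = [2.0, 1.0, 0.5]    # one hypothetical sample

print(predict(w, x, b))  # 1.25
```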

So, for the $28 \times 28$ matrix $X$, can we plug it into this model and run gradient descent to find suitable parameters?

Multi-layer networks

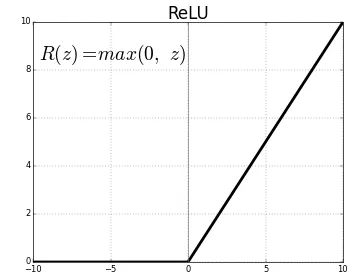

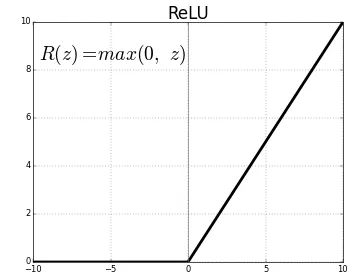

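A single linear regression clearly cannot meet the demands of image recognition, so we introduce the concept of network layers, where each layer applies one more transformation to the data. Because we want a nonlinear element, we can choose ReLU as the activation function; its gradient is 1 for positive inputs, so gradients are less likely to vanish during backpropagation.

$$
\mathrm{ReLU}(x) = \max(0,x)
$$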
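ReLU and its derivative are simple enough to write out by hand. The sketch below uses the common convention of taking the derivative at $x=0$ to be 0, which is an assumption rather than something fixed by the definition.

```python
def relu(x):
    """ReLU(x) = max(0, x)."""
    return max(0.0, x)

def relu_grad(x):
    """Derivative of ReLU: 1 for x > 0, else 0 (taking 0 at x = 0 by convention)."""
    return 1.0 if x > 0 else 0.0

inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
print([relu(x) for x in inputs])       # [0.0, 0.0, 0.0, 0.5, 2.0]
print([relu_grad(x) for x in inputs])  # [0.0, 0.0, 0.0, 1.0, 1.0]
```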

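First, the $28 \times 28$ matrix $X$ is flattened into a $1 \times 784$ row vector $\mathbf x^T$, so $\mathbf x$ becomes a 784-dimensional vector. To reduce its dimension, take an $N \times 784$ matrix $W$ as the parameter of $\mathbf x$; by matrix multiplication, $W\mathbf x$ is an $N$-dimensional vector.

The following steps show the reduction 784 -> 512 -> 256 -> 10, written with row vectors so that each weight matrix has shape [inputs, outputs]:

- $X=[v_1, v_2, \dots, v_{784}]$, with $X$: [1, 784]
- $h_1=\mathrm{relu}(X@W_1+b_1)$, with $W_1$: [784, 512] and $b_1$: [1, 512]
- $h_2=\mathrm{relu}(h_1@W_2+b_2)$, with $W_2$: [512, 256] and $b_2$: [1, 256]
- $out=\mathrm{relu}(h_2@W_3+b_3)$, with $W_3$: [256, 10] and $b_3$: [1, 10]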
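The shape bookkeeping in the steps above can be checked with a plain-Python forward pass. This is a sketch only: the random initialization in `init_layer` and the random stand-in input are assumptions, and the weights are untrained, so the output values carry no meaning beyond their shapes.

```python
import random

random.seed(0)

def dense(x, weights, bias):
    """Compute relu(x @ W + b) for a row vector x and W of shape [inputs, outputs]."""
    outputs = []
    for j in range(len(bias)):
        z = sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
        outputs.append(max(0.0, z))
    return outputs

def init_layer(n_in, n_out):
    """Small random weights and zero biases; the init scheme is an assumption."""
    weights = [[random.uniform(-0.05, 0.05) for _ in range(n_out)] for _ in range(n_in)]
    return weights, [0.0] * n_out

w1, b1 = init_layer(784, 512)
w2, b2 = init_layer(512, 256)
w3, b3 = init_layer(256, 10)

x = [random.random() for _ in range(784)]  # stand-in for one flattened image
h1 = dense(x, w1, b1)
h2 = dense(h1, w2, b2)
out = dense(h2, w3, b3)
print(len(x), len(h1), len(h2), len(out))  # 784 512 256 10
```

In practice the same computation is done with vectorized matrix products, which is what a framework such as Keras handles internally.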

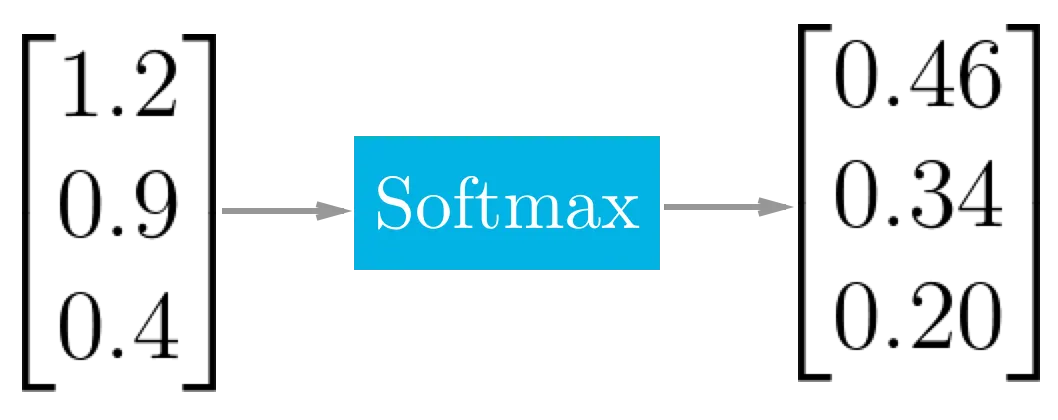

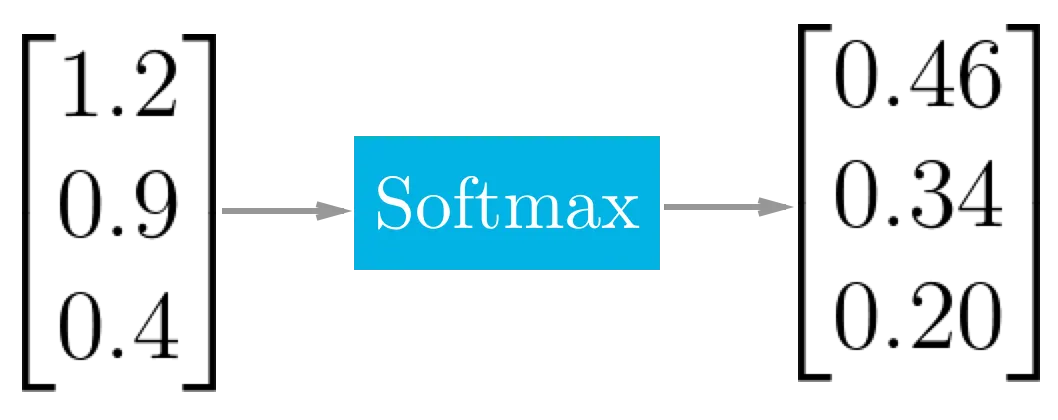

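Repeating this process, the final goal is a 10-dimensional vector. With softmax as the activation function, the result maps to predicted probabilities for the digits 0, 1, 2, ..., 9, and the class with the highest confidence is chosen as the prediction. The softmax outputs are normalized so that they sum to 1, which makes them directly comparable with a One-Hot encoded label, a vector that is 1 at the true digit and 0 elsewhere.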
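The prediction step can be sketched as follows. The ten scores in `logits` are hypothetical, and subtracting the maximum before exponentiating is a standard numerical-stability trick that does not change the result.

```python
import math

def softmax(logits):
    """Map K real scores to K probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def one_hot(label, num_classes=10):
    """Vector with 1.0 at the label index and 0.0 elsewhere."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]

# Hypothetical output scores for the digits 0..9.
logits = [0.1, 0.2, 3.0, 0.0, -1.0, 0.5, 0.3, 0.0, 1.2, 0.4]
probs = softmax(logits)
predicted = max(range(len(probs)), key=lambda k: probs[k])

print(predicted)           # 2
print(one_hot(predicted))  # 1.0 at index 2, 0.0 elsewhere
```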

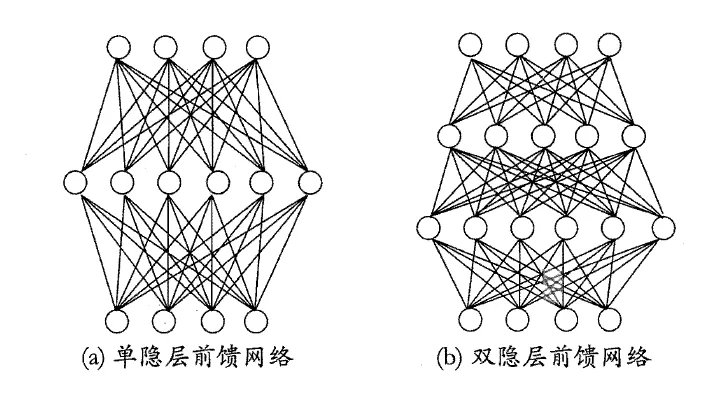

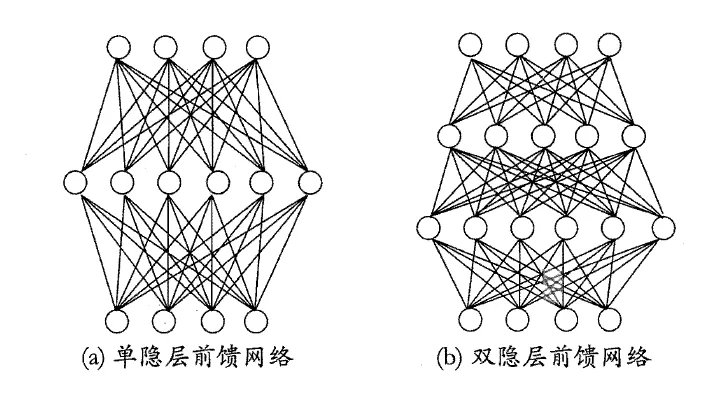

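A common multi-layer network has the layered structure shown in the figure above: each layer of neurons is fully connected to the next layer, with no connections within a layer and no connections that skip layers. Such a structure is usually called a multi-layer feedforward neural network. The input-layer neurons receive external input, the hidden-layer and output-layer neurons process the signal, and the output-layer neurons emit the final result. In other words, input-layer neurons only accept input and perform no function processing, while the hidden and output layers contain functional neurons. A network that contains at least one hidden layer can be called a multi-layer network.

The learning process of a neural network consists of adjusting, based on the training data, the connection weights between neurons and the threshold of each functional neuron.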

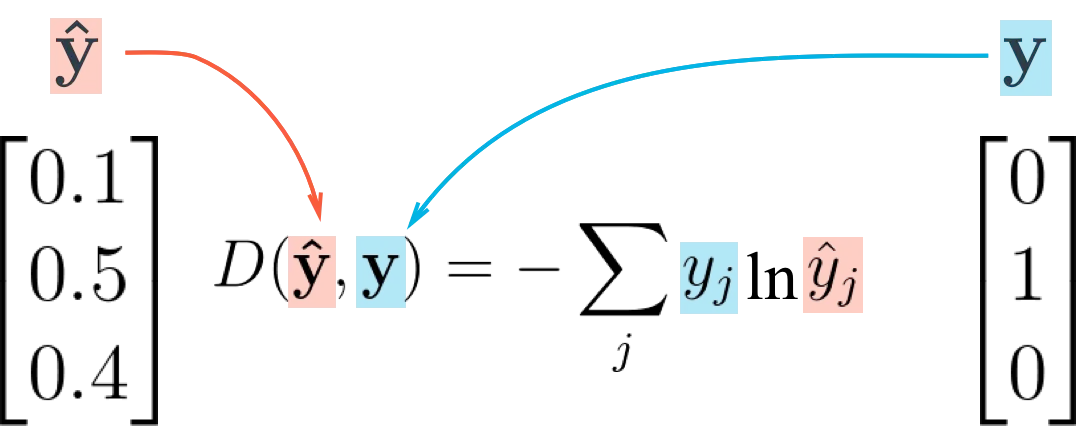

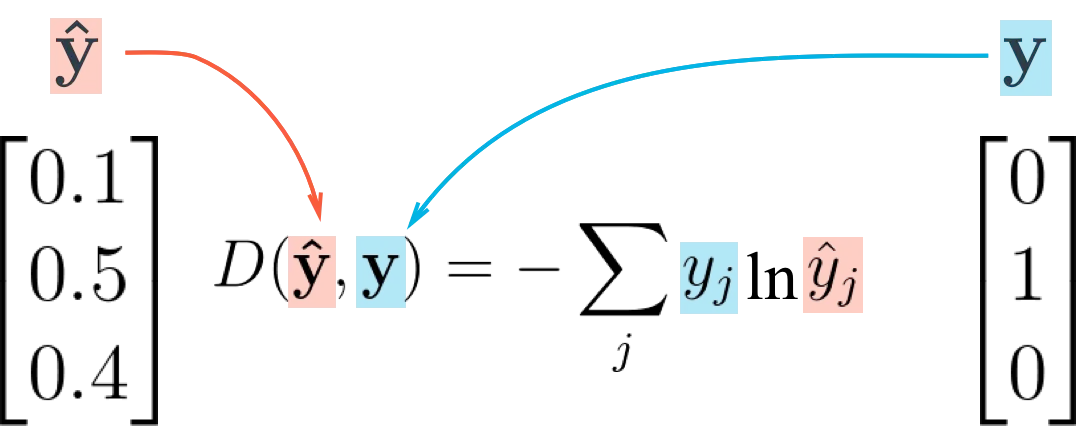

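Cross entropy

In linear regression problems MSE is often used as the loss function, but MSE is of limited use for classification.

We therefore introduce cross entropy as the loss function for estimating the parameters. Entropy is the expected value of information content and measures the uncertainty of a random variable. The larger the entropy, the more uncertain the variable's value; the smaller the entropy, the more certain it is.

Cross entropy can serve as the loss function of a neural network, where $y$ is the distribution of the true labels and $\hat y$ is the label distribution predicted by the trained model.

The cross-entropy loss effectively measures how similar $y$ and $\hat y$ are.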
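Using the standard definition $H(y,\hat y)=-\sum_k y_k \log \hat y_k$, the loss for one sample can be computed as in the sketch below. The two prediction vectors are hypothetical, and the small `eps` is an assumption added only to avoid taking the logarithm of zero.

```python
import math

def cross_entropy(y_true, y_pred, eps=1e-12):
    """H(y, y_hat) = -sum_k y_k * log(y_hat_k); eps guards against log(0)."""
    return -sum(t * math.log(p + eps) for t, p in zip(y_true, y_pred))

y_true = [0.0, 0.0, 1.0]            # one-hot label: the true class is 2
confident = [0.05, 0.05, 0.90]      # hypothetical good prediction
unsure = [0.40, 0.40, 0.20]         # hypothetical poor prediction

print(cross_entropy(y_true, confident))  # about 0.105
print(cross_entropy(y_true, unsure))     # about 1.609
```

The loss is small when the predicted probability of the true class is close to 1 and grows quickly as that probability shrinks.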

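Test environment

Linear regression model

By stacking different neural network layers, we can build the model we want.

A simple network design for this model is given below. Its output layer uses the softmax function:

$$
\sigma (z_j)=\frac{e^{z_j} }{\sum _ {k=1}^K e^{z_k} },\quad j=1,2,\dots,K
$$

For the gradient descent optimizer we choose Adam, one of the most widely used optimizers today.

It combines the per-parameter adaptive learning rates of methods such as AdaGrad with the advantages of Momentum. Momentum can be seen as a ball rolling down a slope, while Adam behaves like a heavy ball with friction, so it tends to prefer flat minima on the error surface and performs very well among gradient-based optimizers.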
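To make the combination concrete, here is a minimal sketch of the standard Adam update rule applied to a one-dimensional toy objective. The objective, the step count, and the learning rate of 0.1 are assumptions for illustration; the experiments in this article use Keras's built-in optimizer, not this function.

```python
import math

def adam_minimize(grad_fn, theta, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a one-dimensional function with the standard Adam update rule."""
    m, v = 0.0, 0.0  # running averages of the gradient and its square
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1 - beta1) * g        # momentum-like first moment
        v = beta2 * v + (1 - beta2) * g * g    # adaptive-scale second moment
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero init
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

# Hypothetical objective f(theta) = (theta - 3)^2 with gradient 2 * (theta - 3).
theta = adam_minimize(lambda th: 2 * (th - 3.0), theta=0.0)
print(round(theta, 2))
```

On this objective the result should end up near the minimizer at 3. The first moment plays the role of Momentum, while dividing by the square root of the second moment gives each parameter its own effective step size.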

Source Code

    from tensorflow.keras import datasets, layers, optimizers, Sequential

    # Load MNIST (cached locally under the given file name): 60k training and 10k test images.
    (x_train, y_train), (x_test, y_test) = datasets.mnist.load_data("mnist.pkl")
    # Scale pixel values from [0, 255] to [0, 1].
    x_train, x_test = x_train / 255.0, x_test / 255.0

    model = Sequential([
        layers.Flatten(input_shape=(28, 28)),    # 28x28 image -> 784-dimensional vector
        layers.Dense(128, activation='relu'),
        layers.Dropout(0.2),                     # randomly drop 20% of activations during training
        layers.Dense(10, activation='softmax')   # one probability per digit
    ])

    # `learning_rate` replaces the deprecated `lr` argument.
    model.compile(optimizer=optimizers.Adam(learning_rate=1e-4),
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    model.fit(
        x=x_train,
        y=y_train,
        batch_size=32,
        epochs=100,
        validation_data=(x_test, y_test)
    )

Result

Epoch 1/100

1875/1875 [==============================] - 7s 3ms/step - loss: 1.0978 - accuracy: 0.6940 - val_loss: 0.3262 - val_accuracy: 0.9122

Epoch 2/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.3469 - accuracy: 0.9029 - val_loss: 0.2520 - val_accuracy: 0.9292

Epoch 3/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.2763 - accuracy: 0.9208 - val_loss: 0.2127 - val_accuracy: 0.9407

Epoch 4/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.2322 - accuracy: 0.9338 - val_loss: 0.1852 - val_accuracy: 0.9473

Epoch 5/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.2066 - accuracy: 0.9405 - val_loss: 0.1656 - val_accuracy: 0.9528

Epoch 6/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1843 - accuracy: 0.9478 - val_loss: 0.1484 - val_accuracy: 0.9574

Epoch 7/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1670 - accuracy: 0.9520 - val_loss: 0.1376 - val_accuracy: 0.9601

Epoch 8/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1534 - accuracy: 0.9557 - val_loss: 0.1290 - val_accuracy: 0.9636

Epoch 9/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1417 - accuracy: 0.9590 - val_loss: 0.1197 - val_accuracy: 0.9659

Epoch 10/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1305 - accuracy: 0.9625 - val_loss: 0.1132 - val_accuracy: 0.9670

Epoch 11/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1222 - accuracy: 0.9653 - val_loss: 0.1091 - val_accuracy: 0.9689

Epoch 12/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1136 - accuracy: 0.9680 - val_loss: 0.1041 - val_accuracy: 0.9698

Epoch 13/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1067 - accuracy: 0.9688 - val_loss: 0.0994 - val_accuracy: 0.9707

Epoch 14/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1062 - accuracy: 0.9692 - val_loss: 0.0960 - val_accuracy: 0.9721

Epoch 15/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1018 - accuracy: 0.9701 - val_loss: 0.0932 - val_accuracy: 0.9734

Epoch 16/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0948 - accuracy: 0.9731 - val_loss: 0.0905 - val_accuracy: 0.9737

Epoch 17/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0906 - accuracy: 0.9729 - val_loss: 0.0884 - val_accuracy: 0.9743

Epoch 18/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0869 - accuracy: 0.9752 - val_loss: 0.0863 - val_accuracy: 0.9748

Epoch 19/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0850 - accuracy: 0.9745 - val_loss: 0.0853 - val_accuracy: 0.9747

Epoch 20/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0845 - accuracy: 0.9751 - val_loss: 0.0814 - val_accuracy: 0.9765

Epoch 21/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0763 - accuracy: 0.9774 - val_loss: 0.0815 - val_accuracy: 0.9765

Epoch 22/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0756 - accuracy: 0.9777 - val_loss: 0.0795 - val_accuracy: 0.9768

Epoch 23/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0708 - accuracy: 0.9786 - val_loss: 0.0782 - val_accuracy: 0.9771

Epoch 24/100

1875/1875 [==============================] - 5s 2ms/step - loss: 0.0706 - accuracy: 0.9794 - val_loss: 0.0776 - val_accuracy: 0.9768

Epoch 25/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0688 - accuracy: 0.9792 - val_loss: 0.0754 - val_accuracy: 0.9773

Epoch 26/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0656 - accuracy: 0.9807 - val_loss: 0.0740 - val_accuracy: 0.9779

Epoch 27/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0614 - accuracy: 0.9818 - val_loss: 0.0746 - val_accuracy: 0.9779

Epoch 28/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0587 - accuracy: 0.9832 - val_loss: 0.0738 - val_accuracy: 0.9777

Epoch 29/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0591 - accuracy: 0.9823 - val_loss: 0.0722 - val_accuracy: 0.9784

Epoch 30/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0562 - accuracy: 0.9825 - val_loss: 0.0736 - val_accuracy: 0.9776

Epoch 31/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0550 - accuracy: 0.9840 - val_loss: 0.0708 - val_accuracy: 0.9783

Epoch 32/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0545 - accuracy: 0.9840 - val_loss: 0.0712 - val_accuracy: 0.9787

Epoch 33/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0518 - accuracy: 0.9850 - val_loss: 0.0714 - val_accuracy: 0.9790

Epoch 34/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0504 - accuracy: 0.9855 - val_loss: 0.0699 - val_accuracy: 0.9795

Epoch 35/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0500 - accuracy: 0.9857 - val_loss: 0.0710 - val_accuracy: 0.9789

Epoch 36/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0488 - accuracy: 0.9853 - val_loss: 0.0696 - val_accuracy: 0.9789

Epoch 37/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0450 - accuracy: 0.9867 - val_loss: 0.0700 - val_accuracy: 0.9784

Epoch 38/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0441 - accuracy: 0.9875 - val_loss: 0.0706 - val_accuracy: 0.9792

Epoch 39/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0445 - accuracy: 0.9876 - val_loss: 0.0701 - val_accuracy: 0.9787

Epoch 40/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0440 - accuracy: 0.9867 - val_loss: 0.0688 - val_accuracy: 0.9792

Epoch 41/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0439 - accuracy: 0.9873 - val_loss: 0.0693 - val_accuracy: 0.9788

Epoch 42/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0407 - accuracy: 0.9886 - val_loss: 0.0698 - val_accuracy: 0.9792

Epoch 43/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0398 - accuracy: 0.9883 - val_loss: 0.0694 - val_accuracy: 0.9793

Epoch 44/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0401 - accuracy: 0.9880 - val_loss: 0.0687 - val_accuracy: 0.9795

Epoch 45/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0393 - accuracy: 0.9878 - val_loss: 0.0684 - val_accuracy: 0.9790

Epoch 46/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0381 - accuracy: 0.9885 - val_loss: 0.0680 - val_accuracy: 0.9796

Epoch 47/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0384 - accuracy: 0.9885 - val_loss: 0.0687 - val_accuracy: 0.9796

Epoch 48/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0370 - accuracy: 0.9888 - val_loss: 0.0676 - val_accuracy: 0.9789

Epoch 49/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0358 - accuracy: 0.9900 - val_loss: 0.0686 - val_accuracy: 0.9793

Epoch 50/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0363 - accuracy: 0.9890 - val_loss: 0.0688 - val_accuracy: 0.9790

Epoch 51/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0329 - accuracy: 0.9910 - val_loss: 0.0691 - val_accuracy: 0.9789

Epoch 52/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0342 - accuracy: 0.9901 - val_loss: 0.0678 - val_accuracy: 0.9795

Epoch 53/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0319 - accuracy: 0.9907 - val_loss: 0.0691 - val_accuracy: 0.9794

Epoch 54/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0318 - accuracy: 0.9903 - val_loss: 0.0699 - val_accuracy: 0.9793

Epoch 55/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0294 - accuracy: 0.9912 - val_loss: 0.0688 - val_accuracy: 0.9794

Epoch 56/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0291 - accuracy: 0.9916 - val_loss: 0.0688 - val_accuracy: 0.9787

Epoch 57/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0305 - accuracy: 0.9913 - val_loss: 0.0698 - val_accuracy: 0.9797

Epoch 58/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0293 - accuracy: 0.9916 - val_loss: 0.0686 - val_accuracy: 0.9794

Epoch 59/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0286 - accuracy: 0.9915 - val_loss: 0.0696 - val_accuracy: 0.9794

Epoch 60/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0279 - accuracy: 0.9919 - val_loss: 0.0688 - val_accuracy: 0.9796

Epoch 61/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0275 - accuracy: 0.9924 - val_loss: 0.0679 - val_accuracy: 0.9799

Epoch 62/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0271 - accuracy: 0.9918 - val_loss: 0.0707 - val_accuracy: 0.9798

Epoch 63/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0249 - accuracy: 0.9933 - val_loss: 0.0721 - val_accuracy: 0.9797

Epoch 64/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0241 - accuracy: 0.9934 - val_loss: 0.0701 - val_accuracy: 0.9795

Epoch 65/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0245 - accuracy: 0.9927 - val_loss: 0.0713 - val_accuracy: 0.9792

Epoch 66/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0249 - accuracy: 0.9924 - val_loss: 0.0708 - val_accuracy: 0.9795

Epoch 67/100

1875/1875 [==============================] - 5s 2ms/step - loss: 0.0238 - accuracy: 0.9938 - val_loss: 0.0723 - val_accuracy: 0.9793

Epoch 68/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0247 - accuracy: 0.9926 - val_loss: 0.0713 - val_accuracy: 0.9796

Epoch 69/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0240 - accuracy: 0.9931 - val_loss: 0.0727 - val_accuracy: 0.9792

Epoch 70/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0233 - accuracy: 0.9930 - val_loss: 0.0719 - val_accuracy: 0.9791

Epoch 71/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0241 - accuracy: 0.9927 - val_loss: 0.0720 - val_accuracy: 0.9798

Epoch 72/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0231 - accuracy: 0.9931 - val_loss: 0.0725 - val_accuracy: 0.9797

Epoch 73/100

1875/1875 [==============================] - 5s 2ms/step - loss: 0.0225 - accuracy: 0.9934 - val_loss: 0.0730 - val_accuracy: 0.9798

Epoch 74/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0218 - accuracy: 0.9933 - val_loss: 0.0727 - val_accuracy: 0.9800

Epoch 75/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0220 - accuracy: 0.9932 - val_loss: 0.0739 - val_accuracy: 0.9792

Epoch 76/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0214 - accuracy: 0.9937 - val_loss: 0.0742 - val_accuracy: 0.9794

Epoch 77/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0203 - accuracy: 0.9943 - val_loss: 0.0742 - val_accuracy: 0.9797

Epoch 78/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0201 - accuracy: 0.9940 - val_loss: 0.0736 - val_accuracy: 0.9798

Epoch 79/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0182 - accuracy: 0.9952 - val_loss: 0.0749 - val_accuracy: 0.9793

Epoch 80/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0186 - accuracy: 0.9944 - val_loss: 0.0731 - val_accuracy: 0.9800

Epoch 81/100

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0198 - accuracy: 0.9946 - val_loss: 0.0739 - val_accuracy: 0.9795

Epoch 82/100

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0179 - accuracy: 0.9952 - val_loss: 0.0741 - val_accuracy: 0.9797

Epoch 83/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0189 - accuracy: 0.9944 - val_loss: 0.0730 - val_accuracy: 0.9800

Epoch 84/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0174 - accuracy: 0.9950 - val_loss: 0.0771 - val_accuracy: 0.9798

Epoch 85/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0174 - accuracy: 0.9952 - val_loss: 0.0756 - val_accuracy: 0.9797

Epoch 86/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0180 - accuracy: 0.9947 - val_loss: 0.0760 - val_accuracy: 0.9798

Epoch 87/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0170 - accuracy: 0.9950 - val_loss: 0.0754 - val_accuracy: 0.9798

Epoch 88/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0166 - accuracy: 0.9955 - val_loss: 0.0762 - val_accuracy: 0.9800

Epoch 89/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0185 - accuracy: 0.9946 - val_loss: 0.0753 - val_accuracy: 0.9799

Epoch 90/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0162 - accuracy: 0.9957 - val_loss: 0.0758 - val_accuracy: 0.9800

Epoch 91/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0163 - accuracy: 0.9956 - val_loss: 0.0773 - val_accuracy: 0.9799

Epoch 92/100

1875/1875 [==============================] - 5s 2ms/step - loss: 0.0159 - accuracy: 0.9958 - val_loss: 0.0761 - val_accuracy: 0.9798

Epoch 93/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0164 - accuracy: 0.9949 - val_loss: 0.0768 - val_accuracy: 0.9795

Epoch 94/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0155 - accuracy: 0.9960 - val_loss: 0.0766 - val_accuracy: 0.9794

Epoch 95/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0164 - accuracy: 0.9948 - val_loss: 0.0778 - val_accuracy: 0.9802

Epoch 96/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0148 - accuracy: 0.9954 - val_loss: 0.0767 - val_accuracy: 0.9802

Epoch 97/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0153 - accuracy: 0.9958 - val_loss: 0.0778 - val_accuracy: 0.9797

Epoch 98/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0139 - accuracy: 0.9959 - val_loss: 0.0786 - val_accuracy: 0.9790

Epoch 99/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0144 - accuracy: 0.9957 - val_loss: 0.0783 - val_accuracy: 0.9791

Epoch 100/100

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0142 - accuracy: 0.9954 - val_loss: 0.0767 - val_accuracy: 0.9806

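Tested on the 10,000 test samples, the model reaches an accuracy of up to 98.06%, which is already good enough for handwritten digit recognition. But can we get higher accuracy?

To do so, we need to introduce a convolutional neural network into the model.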

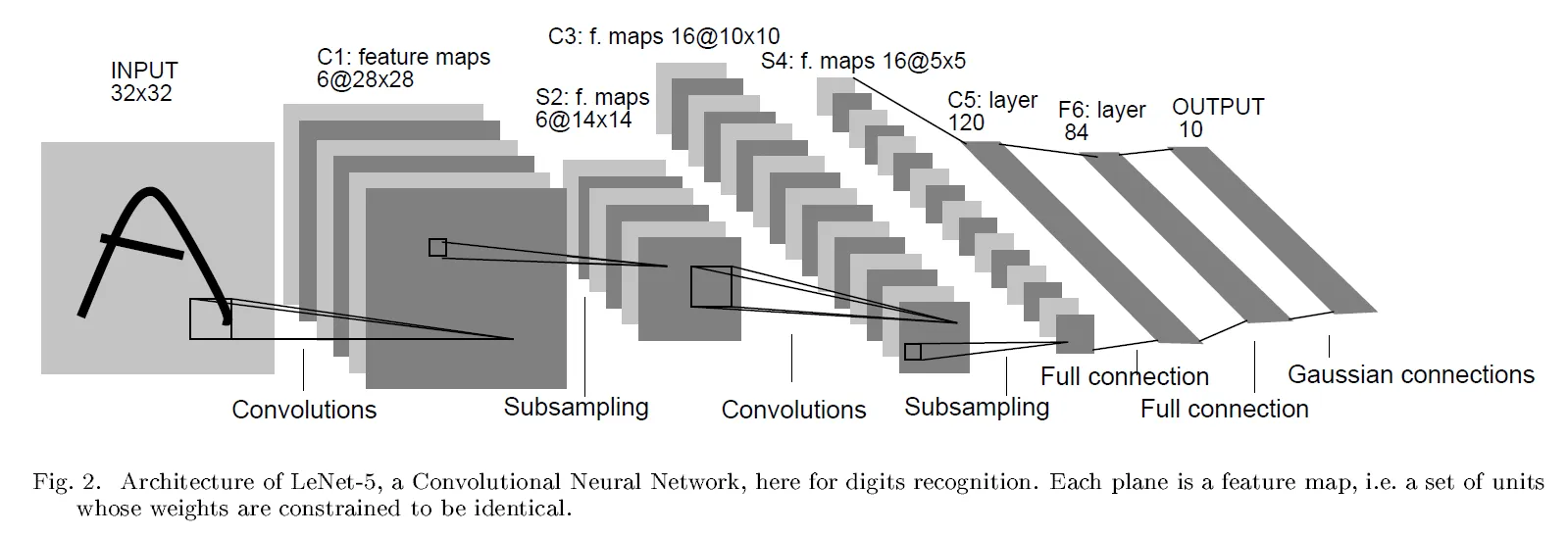

Convolutional neural network model

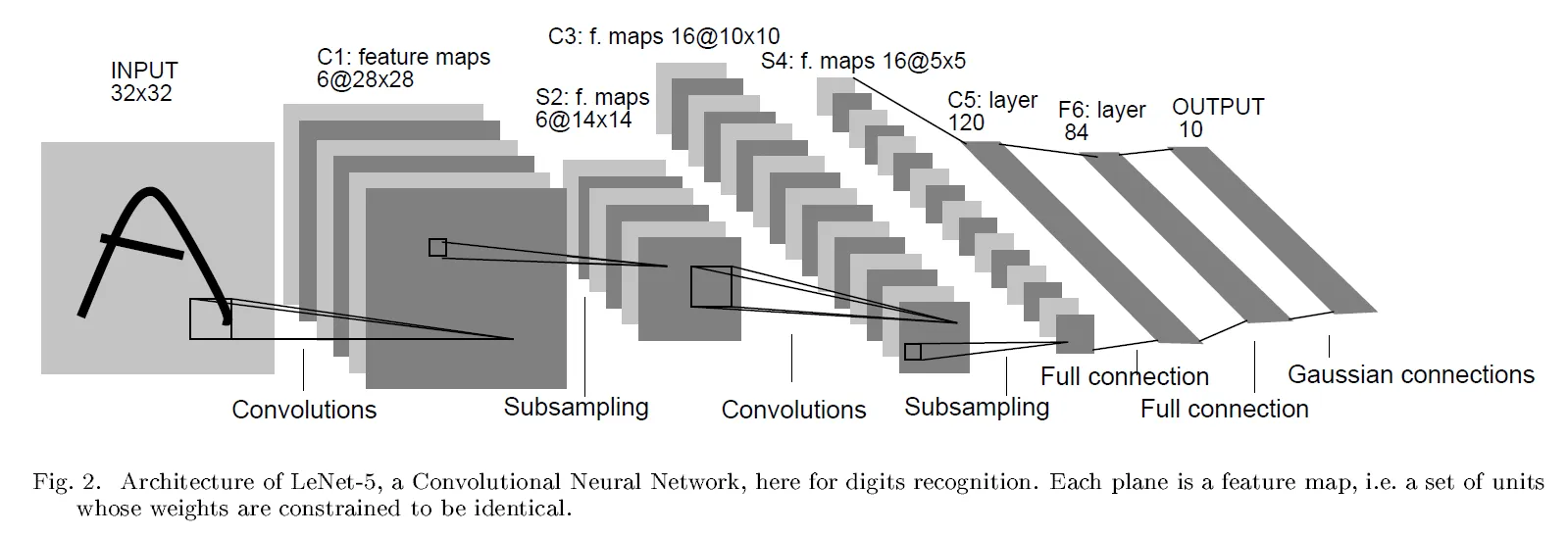

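A convolutional neural network (CNN) is a class of feedforward neural networks that involve convolution computations and have a deep structure, and it is one of the representative algorithms of deep learning. CNNs are capable of representation learning and can classify input in a shift-invariant way according to their hierarchical structure, so they are also called "Shift-Invariant Artificial Neural Networks (SIANN)".

The figure above shows the structure of LeNet-5, a classic CNN model. The network input is a $32 \times 32$ handwritten digit image and the output is the recognized digit. A CNN stacks multiple "convolutional layers" and "sampling layers" to process the input signal, then maps to the output target in the fully connected layers.

Convolutional layer

Each convolutional layer in a CNN consists of several convolution units, and the parameters of each unit are optimized by backpropagation. The purpose of convolution is to extract different features of the input. The first convolutional layer may only extract low-level features such as edges, lines, and corners, while deeper networks iteratively build more complex features from the low-level ones.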

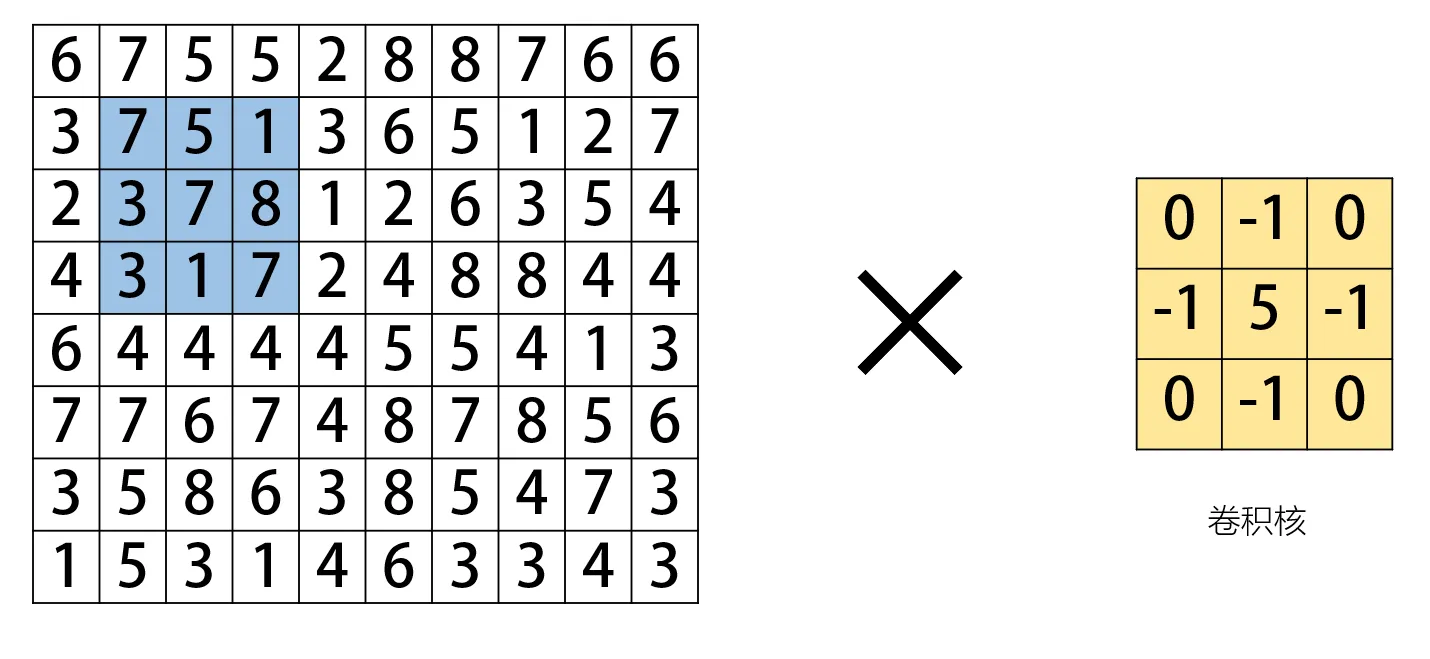

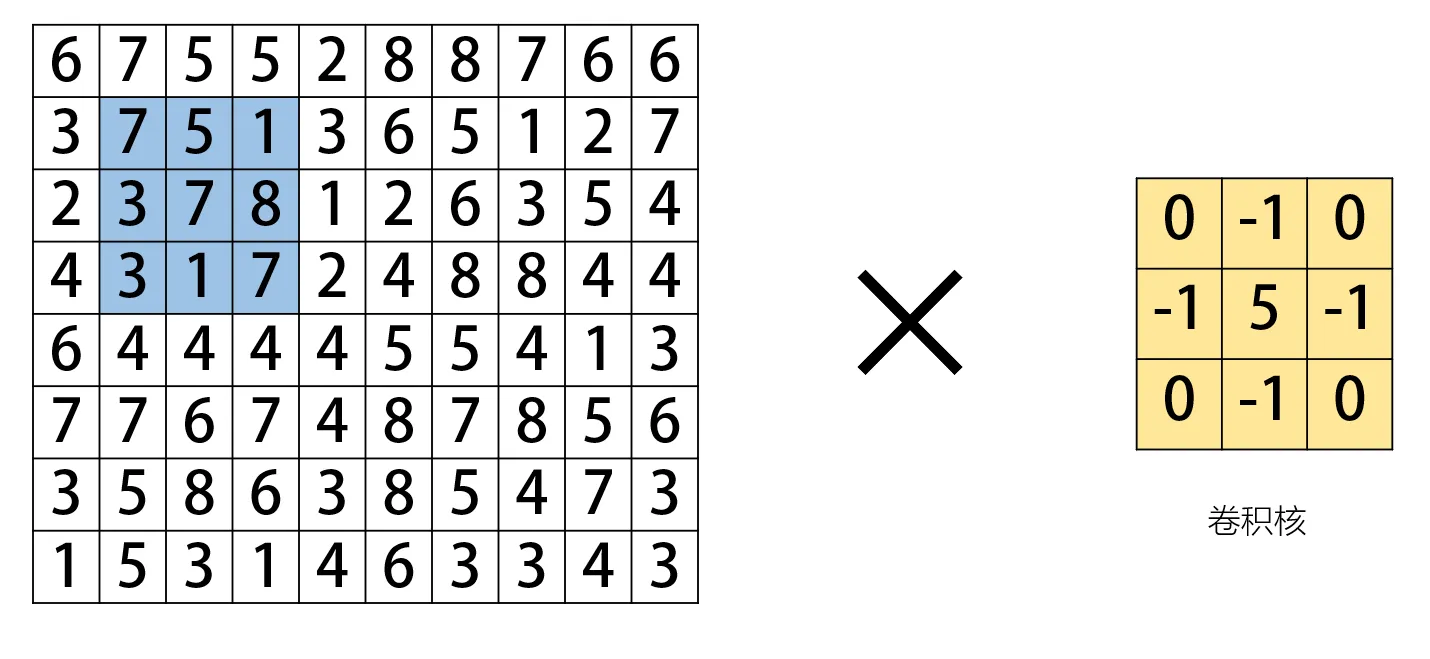

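Each convolutional layer contains multiple feature maps. Each feature map is a "plane" made up of many neurons and extracts one kind of feature from the input through a convolution filter. You can picture each filter as a window that slides across the image and detects a property. When the window stops at a position, the filter (also called the convolution kernel) is multiplied element by element with the pixels under it and the products are summed, as shown in the figure.

The calculation in the figure above is:

$$
(-1 \times 5)+(-1 \times 3)+(-1 \times 1)+(-1 \times 8)+(5 \times 7) = 18
$$

The filter then slides across the image. The number of pixels it moves each time is called the stride: a stride of 1 moves the filter one pixel at a time, while a stride of 2 skips ahead 2 pixels. After moving to the new position the convolution is computed again, and repeating this over the whole image completes one convolutional layer.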
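The sliding-window computation can be sketched in plain Python. The kernel below matches the coefficients in the calculation above (5 at the centre, -1 on four neighbours); the exact layout of the patch is an assumption, since only the five values that enter the sum are known, and the corner values are arbitrary. Like CNN layers in deep learning frameworks, this sketch does not flip the kernel.

```python
def conv2d(image, kernel, stride=1):
    """Valid (no padding) sliding-window product for a square image and kernel."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for r in range(out_size):
        row = []
        for c in range(out_size):
            top, left = r * stride, c * stride
            row.append(sum(
                kernel[i][j] * image[top + i][left + j]
                for i in range(k) for j in range(k)
            ))
        output.append(row)
    return output

# Sharpening-style kernel: 5 at the centre, -1 on the four direct neighbours.
kernel = [[0, -1, 0],
          [-1, 5, -1],
          [0, -1, 0]]

# Hypothetical 3x3 patch: centre 7 with neighbours 5, 3, 1, 8 (corner values are arbitrary).
patch = [[2, 5, 4],
         [3, 7, 1],
         [6, 8, 9]]
print(conv2d(patch, kernel))  # [[18]]

# A 5x5 image of ones gives a 3x3 map with stride 1 and a 2x2 map with stride 2.
ones = [[1] * 5 for _ in range(5)]
print(len(conv2d(ones, kernel, stride=1)), len(conv2d(ones, kernel, stride=2)))  # 3 2
```

A larger stride visits fewer window positions, so the output feature map shrinks accordingly.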

Pooling layer

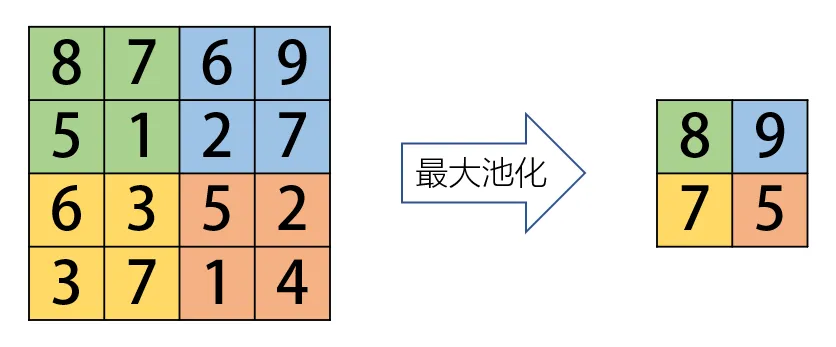

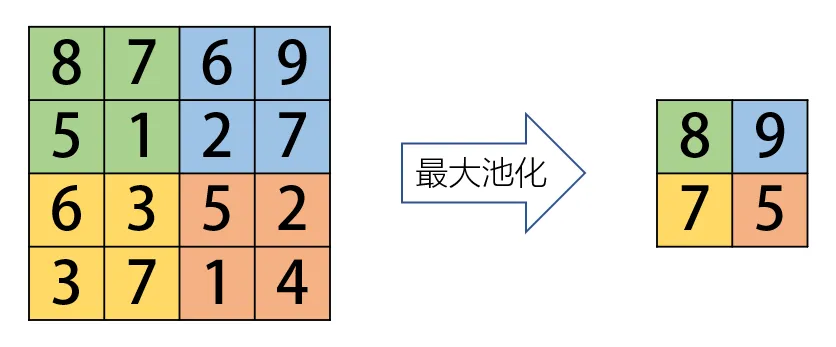

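A pooling layer, also called a sampling layer, subsamples its input based on the principle of local correlation, reducing the amount of data while keeping useful information. Its goal is to further reduce dimensionality by aggregating, or subsampling, the values collected by the convolutional layer. Besides giving the model some regularization against overfitting, this also reduces the amount of computation. Pooling follows the same sliding-window idea as a convolutional layer, but instead of a weighted sum over all values it takes the maximum or the average of its input. These are called max pooling and average pooling respectively.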
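Both pooling variants can be illustrated with one small function. The $4\times 4$ feature map below is hypothetical, and the `mode` argument is a naming choice of this sketch rather than any library's API.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Slide a size x size window over a square feature map and aggregate each window."""
    out_size = (len(feature_map) - size) // stride + 1
    output = []
    for r in range(out_size):
        row = []
        for c in range(out_size):
            window = [
                feature_map[r * stride + i][c * stride + j]
                for i in range(size) for j in range(size)
            ]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        output.append(row)
    return output

# Hypothetical 4x4 feature map.
feature_map = [[1, 3, 2, 4],
               [5, 6, 1, 2],
               [7, 2, 9, 0],
               [1, 8, 3, 4]]

print(pool2d(feature_map, mode="max"))      # [[6, 4], [8, 9]]
print(pool2d(feature_map, mode="average"))  # [[3.75, 2.25], [4.5, 4.0]]
```

With a $2\times 2$ window and stride 2, each side of the feature map is halved, so the number of values drops by a factor of four.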

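Repeating these steps shrinks the feature maps further while the number of filters, and with it the depth, grows. Each feature map specializes in recognizing its own distinct shape.

At the end of the convolutional part, the feature maps are flattened and passed to a fully connected layer with an activation function such as ReLU or SELU, which reshapes the features to a size suitable for the classifier.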

Source Code

    from tensorflow.keras import datasets, layers, optimizers, Sequential

    (x_train, y_train), (x_test, y_test) = datasets.mnist.load_data("mnist.pkl")
    # Scale pixel values from [0, 255] to [0, 1].
    x_train, x_test = x_train / 255.0, x_test / 255.0

    model = Sequential([
        layers.InputLayer(input_shape=(28, 28)),
        layers.Reshape((28, 28, 1)),  # add the single grayscale channel
        # Block 1: 16 filters of size 3x3, then 2x2 max pooling (28x28 -> 14x14)
        layers.Conv2D(kernel_size=3, strides=1, filters=16, padding='same'),
        layers.BatchNormalization(),
        layers.ReLU(),
        layers.MaxPooling2D(pool_size=2, strides=2),
        # Block 2: 32 filters of size 3x3, then 2x2 max pooling (14x14 -> 7x7)
        layers.Conv2D(kernel_size=3, strides=1, filters=32, padding='same'),
        layers.BatchNormalization(),
        layers.ReLU(),
        layers.MaxPooling2D(pool_size=2, strides=2),
        # Classifier head
        layers.Flatten(),
        layers.Dense(units=128),
        layers.BatchNormalization(),
        layers.ReLU(),
        layers.Dense(units=10, activation='softmax')
    ])

    model.compile(
        # `learning_rate` replaces the deprecated `lr` argument.
        optimizer=optimizers.Adam(learning_rate=1e-4),
        loss='sparse_categorical_crossentropy',
        metrics=['accuracy']
    )

    model.fit(
        x=x_train,
        y=y_train,
        epochs=100,
        batch_size=32,
        validation_data=(x_test, y_test)
    )
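The feature-map sizes in the model above can be traced by hand. The sketch below assumes the usual output-size rules: a convolution with 'same' padding produces $\lceil n/s \rceil$ values per side, and pooling without padding (the Keras default for `MaxPooling2D`) produces $\lfloor (n-p)/s \rfloor + 1$. The helper names are hypothetical.

```python
import math

def conv_same(size, stride=1):
    """Output width of a convolution with 'same' padding: ceil(size / stride)."""
    return math.ceil(size / stride)

def pool_valid(size, pool=2, stride=2):
    """Output width of pooling without padding: floor((size - pool) / stride) + 1."""
    return (size - pool) // stride + 1

size, channels = 28, 1
trace = [(size, size, channels)]
for filters in (16, 32):            # the two Conv2D layers in the model above
    size = conv_same(size)          # 3x3 kernel, stride 1, 'same' padding
    channels = filters
    trace.append((size, size, channels))
    size = pool_valid(size)         # 2x2 max pooling with stride 2
    trace.append((size, size, channels))

flattened = size * size * channels
print(trace)      # [(28, 28, 1), (28, 28, 16), (14, 14, 16), (14, 14, 32), (7, 7, 32)]
print(flattened)  # 1568
```

The `Flatten` layer therefore hands a 1568-dimensional vector to the 128-unit dense layer.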

Result

Epoch 1/100

1875/1875 [==============================] - 15s 7ms/step - loss: 0.5233 - accuracy: 0.8596 - val_loss: 0.0788 - val_accuracy: 0.9817

Epoch 2/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0897 - accuracy: 0.9779 - val_loss: 0.0498 - val_accuracy: 0.9864

Epoch 3/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0555 - accuracy: 0.9861 - val_loss: 0.0389 - val_accuracy: 0.9891

Epoch 4/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0431 - accuracy: 0.9885 - val_loss: 0.0363 - val_accuracy: 0.9890

Epoch 5/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0317 - accuracy: 0.9917 - val_loss: 0.0329 - val_accuracy: 0.9899

Epoch 6/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0250 - accuracy: 0.9935 - val_loss: 0.0301 - val_accuracy: 0.9905

Epoch 7/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0196 - accuracy: 0.9953 - val_loss: 0.0297 - val_accuracy: 0.9900

Epoch 8/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0171 - accuracy: 0.9958 - val_loss: 0.0287 - val_accuracy: 0.9905

Epoch 9/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0127 - accuracy: 0.9974 - val_loss: 0.0295 - val_accuracy: 0.9909

Epoch 10/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0107 - accuracy: 0.9976 - val_loss: 0.0300 - val_accuracy: 0.9906

Epoch 11/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0096 - accuracy: 0.9981 - val_loss: 0.0298 - val_accuracy: 0.9907

Epoch 12/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0079 - accuracy: 0.9984 - val_loss: 0.0311 - val_accuracy: 0.9905

Epoch 13/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0067 - accuracy: 0.9988 - val_loss: 0.0290 - val_accuracy: 0.9912

Epoch 14/100

1875/1875 [==============================] - 12s 6ms/step - loss: 0.0062 - accuracy: 0.9989 - val_loss: 0.0284 - val_accuracy: 0.9913

Epoch 15/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0056 - accuracy: 0.9988 - val_loss: 0.0296 - val_accuracy: 0.9899

Epoch 16/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0048 - accuracy: 0.9991 - val_loss: 0.0315 - val_accuracy: 0.9905

Epoch 17/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0039 - accuracy: 0.9994 - val_loss: 0.0333 - val_accuracy: 0.9901

Epoch 18/100

1875/1875 [==============================] - 12s 6ms/step - loss: 0.0037 - accuracy: 0.9994 - val_loss: 0.0306 - val_accuracy: 0.9915

Epoch 19/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0031 - accuracy: 0.9995 - val_loss: 0.0295 - val_accuracy: 0.9912

Epoch 20/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0034 - accuracy: 0.9994 - val_loss: 0.0351 - val_accuracy: 0.9898

Epoch 21/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0032 - accuracy: 0.9994 - val_loss: 0.0348 - val_accuracy: 0.9905

Epoch 22/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0026 - accuracy: 0.9996 - val_loss: 0.0323 - val_accuracy: 0.9900

Epoch 23/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0028 - accuracy: 0.9994 - val_loss: 0.0332 - val_accuracy: 0.9905

Epoch 24/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0021 - accuracy: 0.9995 - val_loss: 0.0346 - val_accuracy: 0.9895

Epoch 25/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0021 - accuracy: 0.9996 - val_loss: 0.0323 - val_accuracy: 0.9907

Epoch 26/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0022 - accuracy: 0.9996 - val_loss: 0.0357 - val_accuracy: 0.9894

Epoch 27/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0019 - accuracy: 0.9997 - val_loss: 0.0341 - val_accuracy: 0.9902

Epoch 28/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0020 - accuracy: 0.9996 - val_loss: 0.0356 - val_accuracy: 0.9895

Epoch 29/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0015 - accuracy: 0.9998 - val_loss: 0.0319 - val_accuracy: 0.9917

Epoch 30/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0021 - accuracy: 0.9996 - val_loss: 0.0351 - val_accuracy: 0.9910

Epoch 31/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0015 - accuracy: 0.9997 - val_loss: 0.0334 - val_accuracy: 0.9915

Epoch 32/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0012 - accuracy: 0.9998 - val_loss: 0.0350 - val_accuracy: 0.9912

Epoch 33/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0016 - accuracy: 0.9997 - val_loss: 0.0391 - val_accuracy: 0.9903

Epoch 34/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0017 - accuracy: 0.9997 - val_loss: 0.0332 - val_accuracy: 0.9913

Epoch 35/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0011 - accuracy: 0.9998 - val_loss: 0.0329 - val_accuracy: 0.9906

Epoch 36/100

1875/1875 [==============================] - 11s 6ms/step - loss: 9.7053e-04 - accuracy: 0.9999 - val_loss: 0.0362 - val_accuracy: 0.9909

Epoch 37/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0016 - accuracy: 0.9998 - val_loss: 0.0367 - val_accuracy: 0.9905

Epoch 38/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0015 - accuracy: 0.9997 - val_loss: 0.0390 - val_accuracy: 0.9898

Epoch 39/100

1875/1875 [==============================] - 11s 6ms/step - loss: 9.9817e-04 - accuracy: 0.9999 - val_loss: 0.0383 - val_accuracy: 0.9904

Epoch 40/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0011 - accuracy: 0.9998 - val_loss: 0.0425 - val_accuracy: 0.9895

Epoch 41/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0011 - accuracy: 0.9998 - val_loss: 0.0369 - val_accuracy: 0.9909

Epoch 42/100

1875/1875 [==============================] - 11s 6ms/step - loss: 8.2537e-04 - accuracy: 0.9999 - val_loss: 0.0425 - val_accuracy: 0.9906

Epoch 43/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0011 - accuracy: 0.9998 - val_loss: 0.0361 - val_accuracy: 0.9906

Epoch 44/100

1875/1875 [==============================] - 11s 6ms/step - loss: 8.0984e-04 - accuracy: 0.9999 - val_loss: 0.0368 - val_accuracy: 0.9906

Epoch 45/100

1875/1875 [==============================] - 11s 6ms/step - loss: 8.2191e-04 - accuracy: 0.9999 - val_loss: 0.0348 - val_accuracy: 0.9915

Epoch 46/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0017 - accuracy: 0.9993 - val_loss: 0.0342 - val_accuracy: 0.9912

Epoch 47/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.0859e-04 - accuracy: 0.9999 - val_loss: 0.0369 - val_accuracy: 0.9913

Epoch 48/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0011 - accuracy: 0.9997 - val_loss: 0.0423 - val_accuracy: 0.9909

Epoch 49/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0011 - accuracy: 0.9997 - val_loss: 0.0387 - val_accuracy: 0.9904

Epoch 50/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.4809e-04 - accuracy: 0.9999 - val_loss: 0.0417 - val_accuracy: 0.9898

Epoch 51/100

1875/1875 [==============================] - 11s 6ms/step - loss: 7.9729e-04 - accuracy: 0.9998 - val_loss: 0.0403 - val_accuracy: 0.9908

Epoch 52/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.9277e-04 - accuracy: 0.9999 - val_loss: 0.0411 - val_accuracy: 0.9908

Epoch 53/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.0962e-04 - accuracy: 0.9999 - val_loss: 0.0355 - val_accuracy: 0.9923

Epoch 54/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0014 - accuracy: 0.9997 - val_loss: 0.0375 - val_accuracy: 0.9913

Epoch 55/100

1875/1875 [==============================] - 11s 6ms/step - loss: 7.5234e-04 - accuracy: 0.9998 - val_loss: 0.0363 - val_accuracy: 0.9917

Epoch 56/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.4577e-04 - accuracy: 0.9999 - val_loss: 0.0437 - val_accuracy: 0.9906

Epoch 57/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0010 - accuracy: 0.9998 - val_loss: 0.0391 - val_accuracy: 0.9916

Epoch 58/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.7010e-04 - accuracy: 0.9999 - val_loss: 0.0392 - val_accuracy: 0.9917

Epoch 59/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.5444e-04 - accuracy: 0.9998 - val_loss: 0.0420 - val_accuracy: 0.9901

Epoch 60/100

1875/1875 [==============================] - 11s 6ms/step - loss: 8.1147e-04 - accuracy: 0.9998 - val_loss: 0.0377 - val_accuracy: 0.9913

Epoch 61/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.5815e-04 - accuracy: 0.9999 - val_loss: 0.0372 - val_accuracy: 0.9920

Epoch 62/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.0678e-04 - accuracy: 0.9999 - val_loss: 0.0378 - val_accuracy: 0.9912

Epoch 63/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.5717e-04 - accuracy: 0.9999 - val_loss: 0.0428 - val_accuracy: 0.9901

Epoch 64/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.4559e-04 - accuracy: 0.9999 - val_loss: 0.0406 - val_accuracy: 0.9909

Epoch 65/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0010 - accuracy: 0.9998 - val_loss: 0.0367 - val_accuracy: 0.9911

Epoch 66/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.6136e-04 - accuracy: 0.9999 - val_loss: 0.0418 - val_accuracy: 0.9903

Epoch 67/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.4421e-04 - accuracy: 0.9999 - val_loss: 0.0476 - val_accuracy: 0.9888

Epoch 68/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0010 - accuracy: 0.9997 - val_loss: 0.0512 - val_accuracy: 0.9881

Epoch 69/100

1875/1875 [==============================] - 12s 6ms/step - loss: 8.2488e-04 - accuracy: 0.9997 - val_loss: 0.0396 - val_accuracy: 0.9912

Epoch 70/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.2365e-04 - accuracy: 0.9999 - val_loss: 0.0392 - val_accuracy: 0.9907

Epoch 71/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.9324e-04 - accuracy: 0.9999 - val_loss: 0.0375 - val_accuracy: 0.9910

Epoch 72/100

1875/1875 [==============================] - 11s 6ms/step - loss: 9.1680e-04 - accuracy: 0.9997 - val_loss: 0.0402 - val_accuracy: 0.9905

Epoch 73/100

1875/1875 [==============================] - 11s 6ms/step - loss: 3.9122e-04 - accuracy: 0.9999 - val_loss: 0.0404 - val_accuracy: 0.9910

Epoch 74/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.6765e-04 - accuracy: 0.9999 - val_loss: 0.0435 - val_accuracy: 0.9906

Epoch 75/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.5137e-04 - accuracy: 0.9998 - val_loss: 0.0374 - val_accuracy: 0.9915

Epoch 76/100

1875/1875 [==============================] - 11s 6ms/step - loss: 3.7004e-04 - accuracy: 1.0000 - val_loss: 0.0397 - val_accuracy: 0.9905

Epoch 77/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.1653e-04 - accuracy: 0.9999 - val_loss: 0.0405 - val_accuracy: 0.9911

Epoch 78/100

1875/1875 [==============================] - 11s 6ms/step - loss: 3.3609e-04 - accuracy: 0.9999 - val_loss: 0.0450 - val_accuracy: 0.9898

Epoch 79/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.6957e-04 - accuracy: 1.0000 - val_loss: 0.0476 - val_accuracy: 0.9898

Epoch 80/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.5900e-04 - accuracy: 0.9998 - val_loss: 0.0421 - val_accuracy: 0.9909

Epoch 81/100

1875/1875 [==============================] - 11s 6ms/step - loss: 2.7198e-04 - accuracy: 1.0000 - val_loss: 0.0402 - val_accuracy: 0.9911

Epoch 82/100

1875/1875 [==============================] - 11s 6ms/step - loss: 3.0327e-04 - accuracy: 1.0000 - val_loss: 0.0458 - val_accuracy: 0.9898

Epoch 83/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.9369e-04 - accuracy: 0.9999 - val_loss: 0.0404 - val_accuracy: 0.9912

Epoch 84/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.2584e-04 - accuracy: 0.9998 - val_loss: 0.0406 - val_accuracy: 0.9913

Epoch 85/100

1875/1875 [==============================] - 12s 6ms/step - loss: 2.9133e-04 - accuracy: 1.0000 - val_loss: 0.0448 - val_accuracy: 0.9906

Epoch 86/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.6074e-04 - accuracy: 0.9998 - val_loss: 0.0451 - val_accuracy: 0.9899

Epoch 87/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.9459e-04 - accuracy: 0.9999 - val_loss: 0.0467 - val_accuracy: 0.9907

Epoch 88/100

1875/1875 [==============================] - 11s 6ms/step - loss: 3.9077e-04 - accuracy: 0.9999 - val_loss: 0.0440 - val_accuracy: 0.9902

Epoch 89/100

1875/1875 [==============================] - 11s 6ms/step - loss: 7.2562e-04 - accuracy: 0.9997 - val_loss: 0.0419 - val_accuracy: 0.9911

Epoch 90/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.4362e-04 - accuracy: 0.9999 - val_loss: 0.0415 - val_accuracy: 0.9912

Epoch 91/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.0428e-04 - accuracy: 0.9999 - val_loss: 0.0423 - val_accuracy: 0.9909

Epoch 92/100

1875/1875 [==============================] - 11s 6ms/step - loss: 3.0838e-04 - accuracy: 1.0000 - val_loss: 0.0412 - val_accuracy: 0.9913

Epoch 93/100

1875/1875 [==============================] - 11s 6ms/step - loss: 5.0971e-04 - accuracy: 0.9999 - val_loss: 0.0443 - val_accuracy: 0.9907

Epoch 94/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.6627e-04 - accuracy: 0.9999 - val_loss: 0.0430 - val_accuracy: 0.9911

Epoch 95/100

1875/1875 [==============================] - 11s 6ms/step - loss: 7.2795e-04 - accuracy: 0.9997 - val_loss: 0.0437 - val_accuracy: 0.9911

Epoch 96/100

1875/1875 [==============================] - 11s 6ms/step - loss: 2.4210e-04 - accuracy: 1.0000 - val_loss: 0.0449 - val_accuracy: 0.9908

Epoch 97/100

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0010 - accuracy: 0.9996 - val_loss: 0.0431 - val_accuracy: 0.9910

Epoch 98/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.8043e-04 - accuracy: 0.9999 - val_loss: 0.0424 - val_accuracy: 0.9906

Epoch 99/100

1875/1875 [==============================] - 11s 6ms/step - loss: 6.8741e-04 - accuracy: 0.9998 - val_loss: 0.0538 - val_accuracy: 0.9901

Epoch 100/100

1875/1875 [==============================] - 11s 6ms/step - loss: 4.4395e-04 - accuracy: 0.9999 - val_loss: 0.0482 - val_accuracy: 0.9896

|

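As the log shows, after introducing a convolutional neural network, the validation accuracy of MNIST handwritten digit recognition peaks at 99.23% (reached at epoch 53 in the log above), a significant improvement.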
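The peak figure can be read off the log programmatically rather than by eye. The following is a minimal sketch that uses only a handful of `val_accuracy` values copied from the log above; the dictionary name `val_accuracy_by_epoch` is an illustrative assumption, not part of the original code. In an actual Keras run, the full per-epoch list would be available as `history.history["val_accuracy"]`, where `history` is the object returned by `model.fit`.

```python
# Validation accuracy for a few epochs, copied from the training log above.
# This is only a subset chosen for illustration; the name of this dictionary
# is hypothetical. With Keras, the complete list would come from
# history.history["val_accuracy"].
val_accuracy_by_epoch = {
    45: 0.9915,
    53: 0.9923,
    61: 0.9920,
    68: 0.9881,
    100: 0.9896,
}

# Pick the epoch whose validation accuracy is highest.
best_epoch = max(val_accuracy_by_epoch, key=val_accuracy_by_epoch.get)
best_acc = val_accuracy_by_epoch[best_epoch]

# Compare against the last epoch of training.
final_acc = val_accuracy_by_epoch[100]

print(f"best epoch: {best_epoch}, val_accuracy: {best_acc:.2%}")
print(f"final epoch: 100, val_accuracy: {final_acc:.2%}")
```

Among these sampled epochs, the best validation accuracy is not the one from the final epoch, which is why the result is quoted as the highest value reached rather than the value at the end of training.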